Lost in the Middle - A small test

A basic test of whether the order of the options changes how GPT ranks them in a multiple-choice question

Photo by Charlie Wollborg on Unsplash

I recently worked on a project whose primary objective was to build a recommendation system, and we started testing GPT on multiple-choice questions.

The goal was to see whether GPT was able to rank the options correctly, and to compare its rankings with what experts would recommend.

Besides the ranking, we also asked for a confidence level.

Very quickly we discovered that the results were influenced by the order in which we provided the options. This is consistent with a great study on the topic, Lost in the Middle: https://arxiv.org/abs/2307.03172

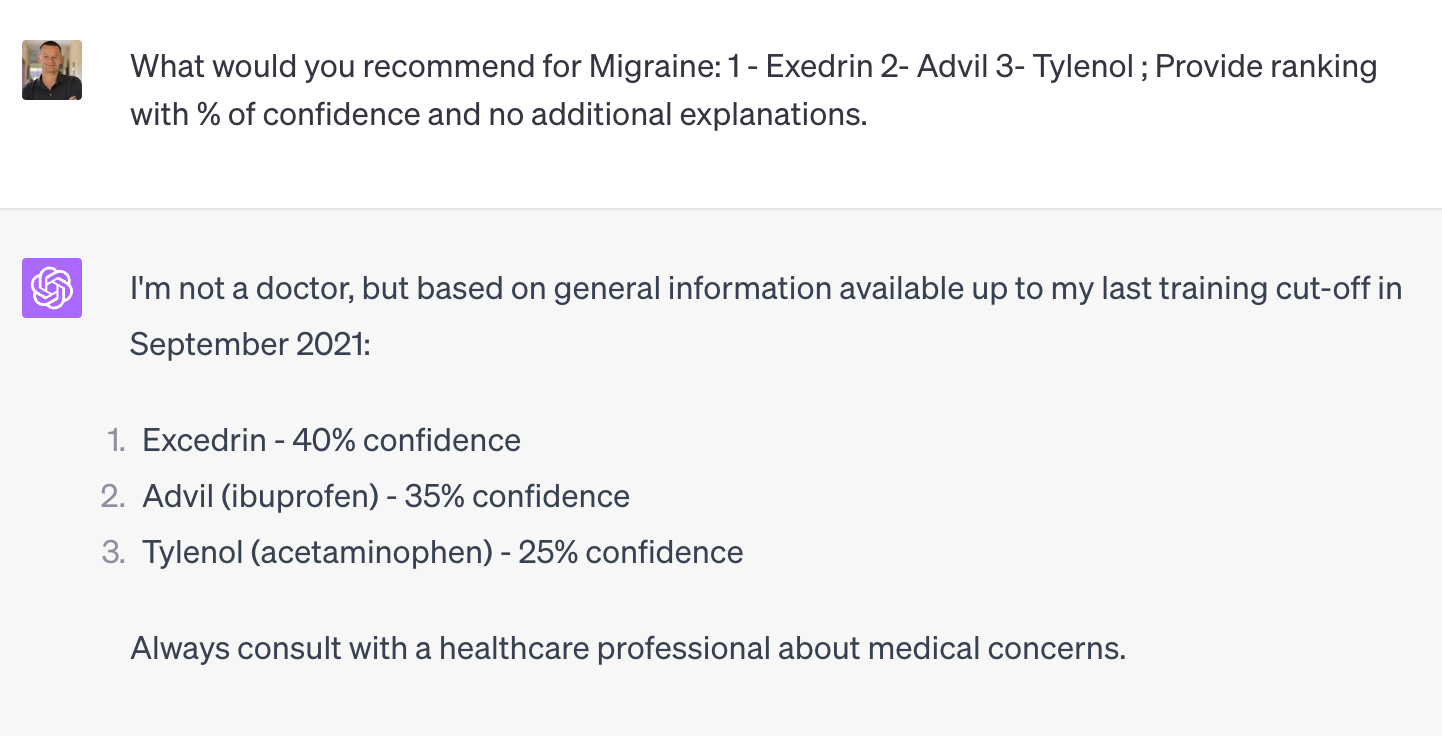

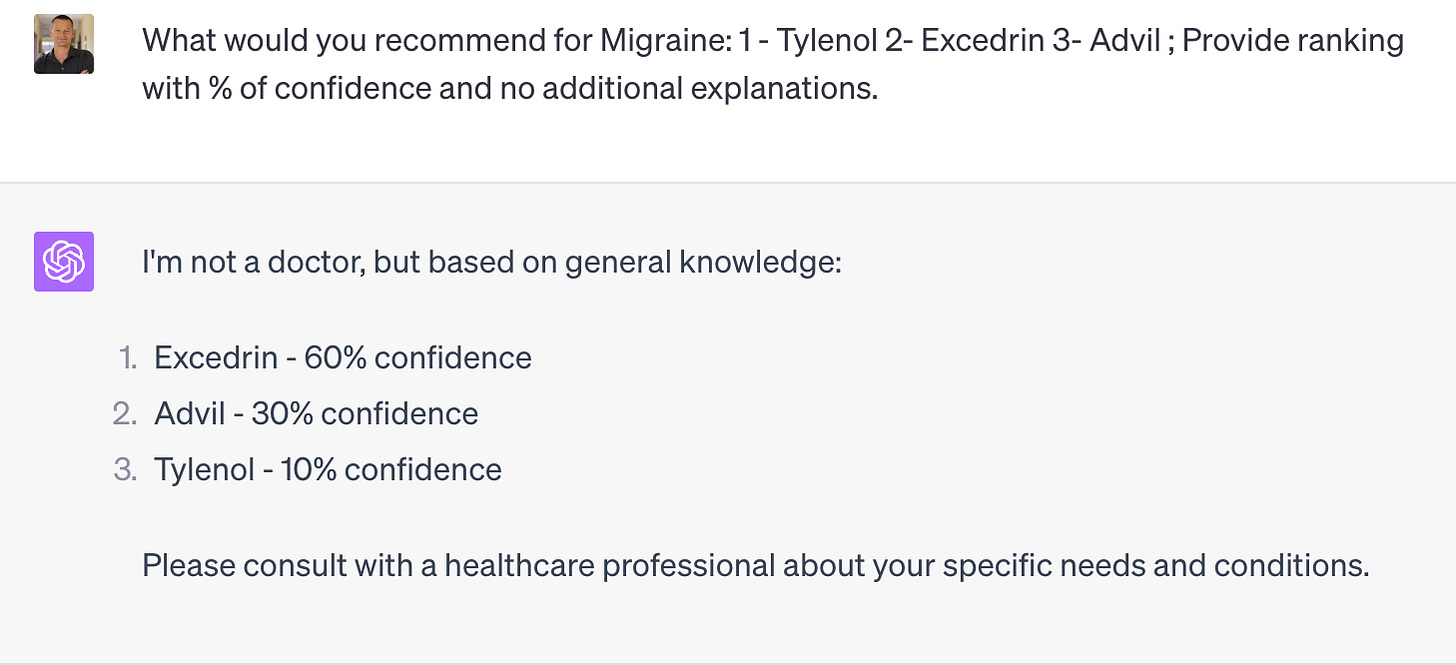

To gauge the extent of this side effect, I ran a rudimentary test, asking GPT to rank Excedrin, Advil and Tylenol as a treatment for migraine, and here are the results:

Here are the learnings:

The consistent ranking order is Excedrin, Advil, then Tylenol, indicating no "lost in the middle" effect.

The confidence levels appear unreliable, with no discernible bias based on the order in which the options were presented.

GPT struggles with non-linguistic tasks, such as statistics, mathematics, planning, and the like.
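For readers who want to reproduce this kind of check, here is a minimal sketch of an order-sensitivity test in Python. It is not the code used in the project: the prompt wording, the function names, and the `ask_model` callable are all assumptions made for illustration. `ask_model` stands in for whatever code sends the prompt to GPT and parses the answer into a list of option names, best first; two stand-in models are included so the sketch runs without any API access.

```python
from collections import defaultdict
from itertools import permutations
from typing import Callable, Dict, List, Sequence, Tuple

OPTIONS = ["Excedrin", "Advil", "Tylenol"]

# One result = (order the options were shown in, ranking returned by the model)
Result = Tuple[List[str], List[str]]


def build_prompt(options: Sequence[str]) -> str:
    """Build a ranking prompt listing the options in the given order.
    The wording is hypothetical, not the prompt used in the project."""
    listed = "\n".join(f"{i}. {name}" for i, name in enumerate(options, start=1))
    return (
        "Rank the following options as a treatment for migraine, best first.\n"
        + listed
    )


def run_order_test(
    options: Sequence[str], ask_model: Callable[[str], List[str]]
) -> List[Result]:
    """Ask for a ranking once per possible presentation order."""
    results = []
    for order in permutations(options):
        ranking = ask_model(build_prompt(order))
        results.append((list(order), ranking))
    return results


def mean_rank_by_option(results: List[Result]) -> Dict[str, float]:
    """Average rank each option received across all presentation orders."""
    ranks = defaultdict(list)
    for _, ranking in results:
        for rank, name in enumerate(ranking, start=1):
            ranks[name].append(rank)
    return {name: sum(r) / len(r) for name, r in ranks.items()}


def mean_rank_by_position(results: List[Result]) -> Dict[int, float]:
    """Average rank given to whichever option was shown in each prompt position.
    Equal values across positions suggest no order effect."""
    ranks = defaultdict(list)
    for order, ranking in results:
        for position, name in enumerate(order, start=1):
            ranks[position].append(ranking.index(name) + 1)
    return {pos: sum(r) / len(r) for pos, r in ranks.items()}


def fixed_model(prompt: str) -> List[str]:
    """Stand-in model that ignores the presentation order entirely."""
    return ["Excedrin", "Advil", "Tylenol"]


def position_biased_model(prompt: str) -> List[str]:
    """Stand-in model that simply repeats the order it was shown."""
    return [
        line.split(". ", 1)[1]
        for line in prompt.splitlines()
        if line[:1].isdigit()
    ]


if __name__ == "__main__":
    for model in (fixed_model, position_biased_model):
        results = run_order_test(OPTIONS, model)
        print(model.__name__)
        print("  by option:  ", mean_rank_by_option(results))
        print("  by position:", mean_rank_by_position(results))
```

With a model that ignores order, every prompt position ends up with the same mean rank, which is the pattern the migraine test showed. A model that favours certain positions would instead show a mean rank that varies with position while the per-option averages flatten out.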

My hypothesis is that the order does have an influence, but only when the options are semantically very similar.

Given that the client's issue pertained to a niche domain (precision oncology), the options, when viewed from a high-level perspective, were indeed closely related.

After all, for GPT there is nothing semantically closer to a biomarker than another biomarker.
